ServiceNow Integration & Non-Production FinOps Policies

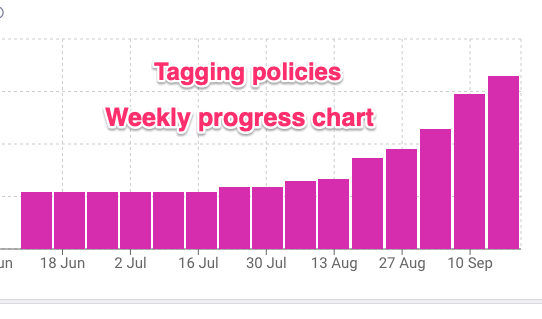

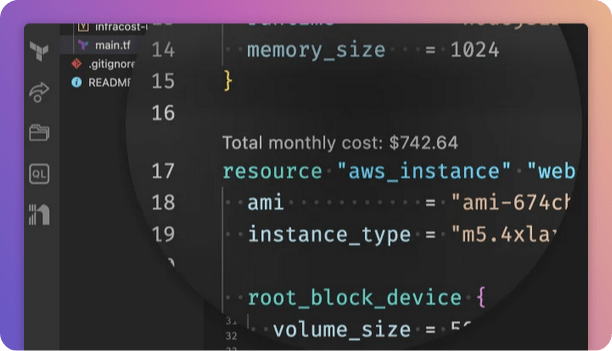

Happy August! Summer may be in full swing, but our team has been hard at work in between recharging on vacations. I’m excited to announce the release of major product updates, all focused on helping enterprises with FinOps tagging 🏷️

API for ServiceNow integration

Large enterprises are familiar with this FinOps challenge: cloud resources need…